FPGA VS GPU

What processing units should you use, and what are their differences? In this blog post Haltian’s Senior Software Specialist, Jyrki Leskelä, compares two common processors: FPGA and GPU.

When designing a complex electronic device, such as a scientific camera, one of the first tasks is to select the processing units, i.e. processors, you want to use.

Nowadays, there are several types of processors, but when discussing image processing or other heavy calculations, the following three are the main ones: Central Processing Unit (CPU), Graphics Processing Unit (GPU), and Field Programmable Gate Array (FPGA).

The distinction between these three is easy. In principle, you could compute everything with CPU, but the other processor types are faster or more energy-efficient with specific applications. To understand why, it’s good to know how the GPU and FPGA came into existence.

This article will go through the history of the different processing units, explain the differences between them, and finally compare them by different application areas.

History

The history of GPU can be tracked from specialized graphics cards of 70s arcade games to 2D blitters in the 80s, all the way to the fixed function 3D cards in the 90s.

The real boom of programmable GPU started in the late 2000s due to the need for configurable light shading and more dynamic 3D geometry programming. At the time the full programmability was a matter of programming language specification and incremental processor innovation. It’s no wonder that workloads that resemble computer graphics or physical world simulation suit this type of processor so well. The innovation has not stopped though, a very recent trend is the addition of tensor cores to further speed up the deep learning use cases.

The FPGA was born in the 80s as a relatively simple re-programmable glue logic device. As the number of logic ports increased in the 90s, the FPGA became widely used in for Telecommunications applications. A new boom of high-performance computing with FPGA started in the 2010s, partially due to hybrid FPGA chips that fuse in CPU cores together with the huge arrays field-programmable logic blocks.

Practical perspective to modern GPU vs. FPGA

From a systems design perspective, the modern FPGA is an entirely different beast than GPU, though it’s possible to apply either one for similar tasks in some cases. For example, the FPGA can be configured to directly access hardware I/O without the latency of internal bus structures common to general-purpose processors. The processing functionality of FPGA can be customized at logic port level, while the GPU can only be programmed using its static vectored instruction set. This difference makes FPGA more responsive and allows more dedicated special-purpose innovation.

While GPU does not have such a fast latency, its performance should not be under-estimated. The trick is in the brute-force throughput: a typical GPU can execute hundreds or thousands of arithmetic operations in parallel. This is not as easy as it sounds: the speedup figures can only be achieved with uniform computation, i.e. running the same operation for a wide array of data elements. This is a double-edged sword though since it’s quite difficult to find fast performing algorithms to all the necessary problem domains. But when found, they do run super-fast.

There is one aspect of GPU that makes it a bit clumsy compared to FPGA. GPU is typically connected as a co-processor of CPU so its connectivity to elsewhere in the system is quite restricted. A modern nVidia GPU can connect to system RAM, display frame buffer and, in some cases, the frame grabbing device (camera).

More from Jyrki Leskelä: How to design modern camera equipment

For any practical use case, it requires assistance from the CPU. This differs a lot from the FPGA that can be connected to exotic hardware interfaces with real-time diagnostic logic and iterative feedback loops without problems.

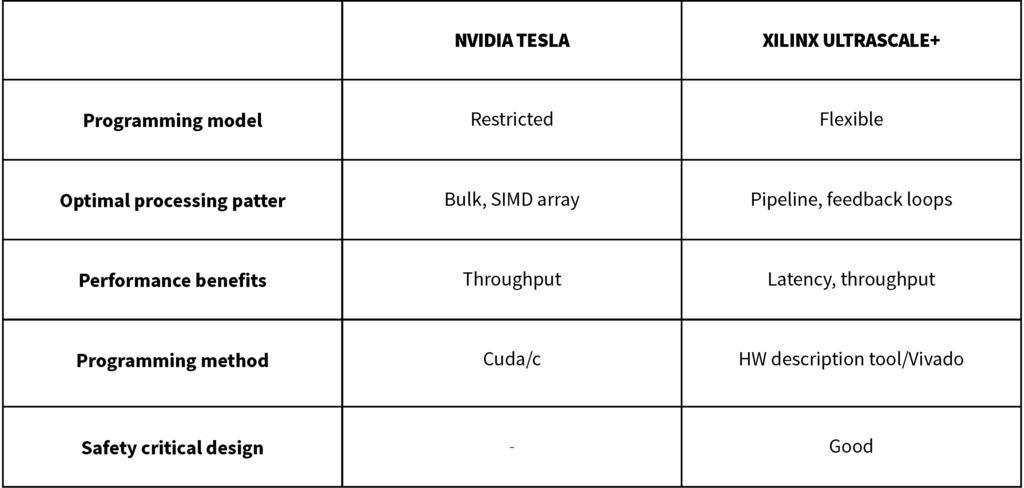

In table 1 you can see the comparison between two recent processors: nVidia Tesla P40 GPU and Xilinx Ultrascale+ TM XCVU13P FPGA [1] [2]. Both are capable of near 40 tera-operations / second (8-bit integer).

As you can see from the table, even though the performance level is the same, they are different in almost every other aspect.

What you cannot see from the table is energy efficiency, which is not symmetric either. The FPGA consumes less energy with integer computation and logic. GPU architecture, on the other hand, is streamlined for bulk floating-point processing.

Another important aspect is the engineering effort needed to create features with these technologies. GPU usage requires only SW programming skills, while the FPGA requires some HW definition expertise as well. With GPU, all the low-level instructions are ready-made and tested for you. With FPGA, you have to suffer through a lengthy verification phase for the newly created digital logic. It is safe to say that the FPGA application is usually more labor-intensive.

Comparison by application

Both GPU and FPGA are established technologies with several well-known application areas. Here is a listing of some of the known applications for both.

FPGA applications, Xilinx [3]

- Hardware development

- Old hardware emulation

- Real-time data acquisition

- Real-time DSP / image processing

- Robotics/Motion control

- Connecting to proprietary interfaces

- Custom SoC

GPU applications, Nvidia [4]

- Graphics processing

- Computer vision

- Bioinformatics: Sequencing and protein docking

- Computational Finance: Monte Carlo simulations

- Data Intelligence: NPP and CuFFTs

- Machine Learning / Big Data processing

- Weather: fluid dynamics: Navier-Stokes equations

The comparison by application area is not as simple as it sounds. These are broad use-cases and you cannot be certain that the established choice is necessarily the best one. It is better to look at some of the applications a little bit deeper.

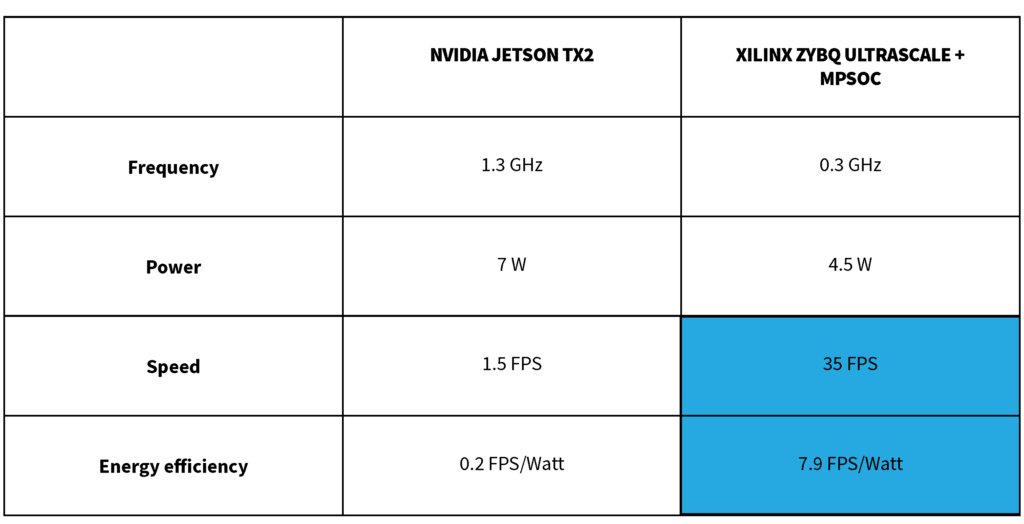

Image recognition is an important part of any computer vision system. Well documented implementations exist for several GPU’s and FPGA’s. For example, Nakahara, Shimoda and Sato [5] compared the nVidia Jetson TX2 GPU against the Xilinx Zynq UltraScale+ MPSoC FPGA using YOLO v2 algorithm as a benchmark. They found out that the FPGA was superior both in the speed and power efficiency, see table 2.

This is not the only comparison to show similar results. The convolutional Neural Net (CNN) algorithm for image recognition implemented on a ten-dollar ZYNQ 7020 chip [6] was shown to be 30 times faster than previous GPU algorithm.

The difference between GPU and FPGA performance is not a static factor, but it does depend on the size of the data set. A study by Sanaullah and Herbordt [7] revealed that FPGA can compute small samples of 3D FFT tens of times faster than GPU. The difference is less clear when the data set gets bigger.

In addition to the size, the structure of the data and computation is also significant. FPGA is known to be excellent in line-by-line image processing while GPU is optimal in handling big texture frames at a time. GPU can use big intermediate RAM buffers easily, and the excellent interoperation with the main CPU makes it great for complex computation. One benefit of GPU is the bigger selection of ready-made software libraries and applications.

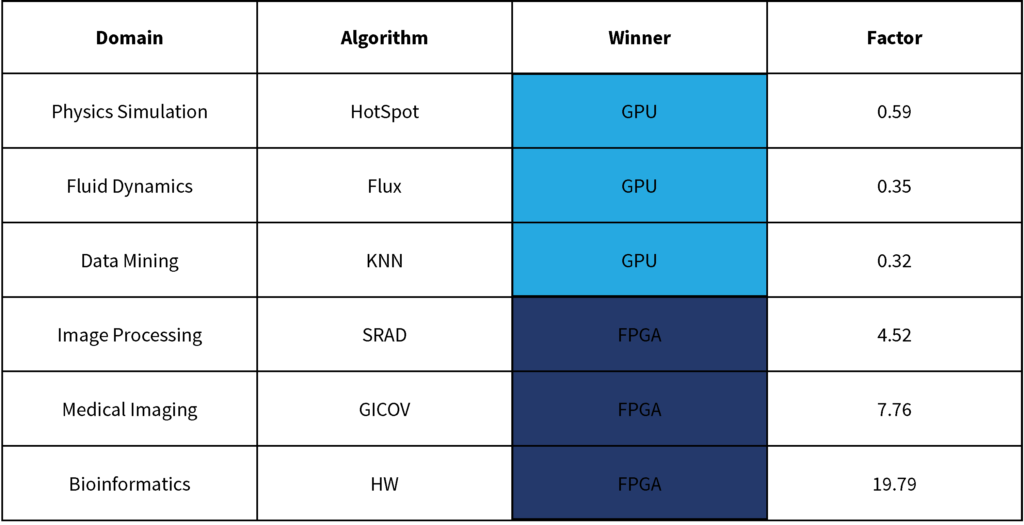

The University of California, Los Angeles (UCLA) and Xilinx studied the FPGA/GPU differences by diligently porting various computing kernels to Xilinx Virtex 7 FPGA and 28nm Nvidia K40c GPU [8]. Table 3 shows the winners:

So, what processors should you use?

As a summary, it is not that difficult to select what processor types to use in your device. You just need to know the key features and applications. CPU engineering cost is lowest, so if there is no need to use an accelerator, then use the CPU.

When it’s necessary to get a boost of speed for some feature, check first if it’s possible to apply GPU. It might be faster than the CPU and the engineering effort might be a reasonable compromise. This is true especially if you can apply some of the ready-made GPU libraries.

As a last resort, consider the FPGA. There are things you just cannot do with GPU: immediate high-latency processing of data from a special hardware interface and real-time reactions based on the received data. FPGA is meant for those cases – when CPU or GPU is just not enough.

Just remember: it’s completely okay to spread the work to all these processing elements. For example, let the FPGA to carry over the front-end of the data pipeline, GPU to handle the later phases, and CPU to orchestrate the overall control of the system.